Integrating framework components and business platforms in SOFIE

In the context of SOFIE, several IoT business platforms are being developed on top of the IoT federation framework defined in deliverables D2.4 and D2.5. To support this, an IoT framework repository is being developed, consisting of various components, adapters for well-known IoT platforms, and security mechanisms. These components can be used to create business platforms, including those for the four SOFIE real-world pilot use cases.

LMF Ericsson is responsible for developing an integration platform, accessible at https://ci.sofie-iot.eu/jenkins, for use by the developers of the framework components and of the business platforms. The platform serves several purposes:

- Deliver the available SOFIE software components for evaluation in Work Package 4 (WP4), in accordance with deliverable D4.1

- Integrate the available SOFIE software components for use in the pilots

- Provide quality control mechanisms for the WP2 framework components that are available in public software repositories for anyone to use

- Deliver a complete CI/CD environment in the AWS public cloud for SOFIE users

- Deliver the CI/CD processes and methodology for SOFIE users

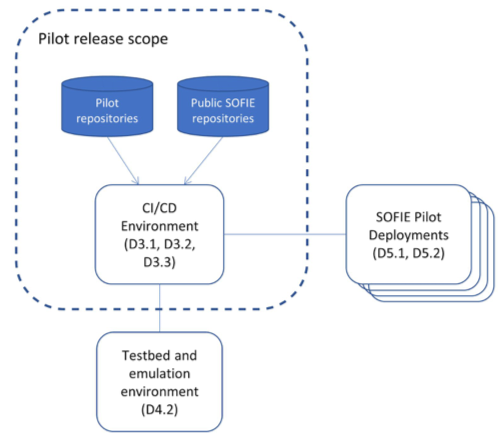

Figure 1: Deliverables related to the integration platform.

Component onboarding

One of the reasons for providing CI-as-a-Service to the developers of the pilot and framework software components is to increase the overall quality of the components they produce. By owning and managing the CI environment, LMF Ericsson can apply its expertise in the field and offer additional services that might not be available in the pilots' own testing environments. This improves the quality of the component code, for example by reducing the number of bugs that could affect a component's functionality once it is made publicly available to its users. Similarly, by hosting the entire CD runtime, LMF offers a Platform-as-a-Service environment, deploying all framework and pilot components on infrastructure that it owns and manages.

Several components are planned for integration into the CI/CD pipeline. Some are developed by the SOFIE pilots. Others are developed by WP2, either to be integrated into the different pilots after customisation to each pilot's needs, or to be deployed as standalone components. Onboarding a component onto the CI/CD pipeline requires cooperation between LMF and the component's developer.

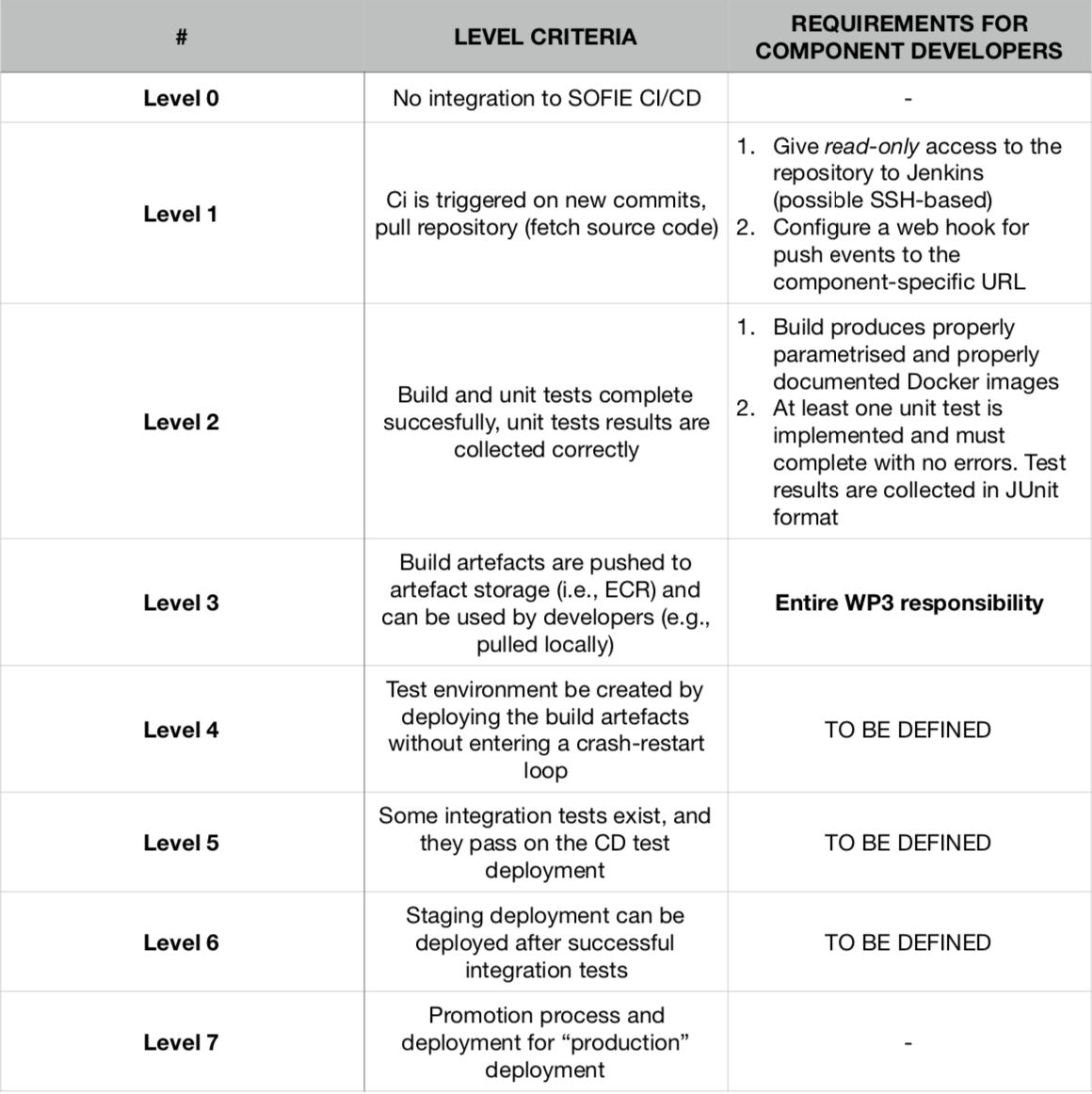

At any given time, a component is at one of the seven maturity levels shown in Table 1. Levels 1-3 describe CI maturity, while levels 4-7 describe the component's maturity with regard to the CD environment. A component must reach full CI maturity (level 3) before proceeding to CD integration (level 4 onwards).

Table 1: Onboarding maturity levels
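The gating rule between the CI and CD levels can be expressed in a few lines. The Python sketch below is illustrative only: the class and function names are hypothetical, the comments for levels 1-3 paraphrase the onboarding steps described in this section, and levels 4-7 are left undescribed because their criteria have not yet been defined.

```python
from enum import IntEnum


class Maturity(IntEnum):
    """Seven onboarding maturity levels: 1-3 cover CI, 4-7 cover CD."""

    LEVEL_1 = 1  # CI agent has repository access and webhooks are configured
    LEVEL_2 = 2  # build produces Docker images, unit tests report in JUnit format
    LEVEL_3 = 3  # artefacts are pushed to ECR (full CI maturity)
    LEVEL_4 = 4  # CD levels: criteria not yet defined
    LEVEL_5 = 5
    LEVEL_6 = 6
    LEVEL_7 = 7

    @property
    def phase(self) -> str:
        """Return "CI" for levels 1-3 and "CD" for levels 4-7."""
        return "CI" if self <= Maturity.LEVEL_3 else "CD"


FULL_CI = Maturity.LEVEL_3


def can_promote(current: Maturity, target: Maturity) -> bool:
    """Check a promotion against the rule stated in the text.

    Promotions must move upwards, and no CD level (4-7) may be entered
    before the component has reached full CI maturity (level 3).
    """
    if target <= current:
        return False
    if target.phase == "CD" and current < FULL_CI:
        return False
    return True
```

For example, `can_promote(Maturity.LEVEL_2, Maturity.LEVEL_4)` is rejected because the component has not yet reached level 3.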

Specifically, for the CI maturity levels, component developers are responsible for:

1. Giving the CI agent read-only access to the component repository either via SSH-based (preferred) or HTTPS-based authentication.

2. Configuring webhooks that trigger new builds in the CI environment whenever new commits are pushed to a branch specified in the integration documentation. Completing steps 1 and 2 promotes the component to maturity level 1.

3. Defining the component build process so that it produces Docker images as final artefacts, parametrized so that IP addresses and port numbers are supplied as parameters rather than hard-coded. The tagging scheme of the Docker images must be specified in the integration documentation.

4. Writing unit tests for those artefacts, with results collected in JUnit format at a path specified in the integration documentation. Completing steps 3 and 4 promotes the component to maturity level 2. However, if either the build process or the unit tests fail, the component cannot be taken to level 3, since its artefacts will not be pushed to the remote artefact repository.
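As an illustration of the rule that a failed build or failed unit tests keep artefacts out of the remote repository, the following Python sketch reads a JUnit XML report and decides whether the push step may run. It is a hypothetical helper, not part of the SOFIE CI configuration. It assumes the common JUnit layout, in which `<testsuite>` elements carry `tests`, `failures` and `errors` attributes, and it treats a report containing no tests as a failure, which is an assumption rather than a stated SOFIE rule.

```python
import xml.etree.ElementTree as ET


def junit_summary(xml_text: str) -> dict:
    """Sum the tests/failures/errors counters of a JUnit XML report.

    Accepts either a single <testsuite> root or a <testsuites> root whose
    direct children are <testsuite> elements.
    """
    root = ET.fromstring(xml_text)
    suites = [root] if root.tag == "testsuite" else root.findall("testsuite")
    totals = {"tests": 0, "failures": 0, "errors": 0}
    for suite in suites:
        for key in totals:
            totals[key] += int(suite.get(key, "0"))
    return totals


def may_push_artefacts(build_succeeded: bool, junit_xml: str) -> bool:
    """Gate the push to the remote artefact repository.

    The push is allowed only if the build succeeded and the unit tests
    produced no failures or errors. Treating an empty report as a failure
    is an assumption of this sketch.
    """
    if not build_succeeded:
        return False
    totals = junit_summary(junit_xml)
    return totals["tests"] > 0 and totals["failures"] == 0 and totals["errors"] == 0
```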

Taking a component from level 2 to level 3 is entirely the responsibility of LMF, which creates the required ECRs (Elastic Container Registries) on AWS. The registry permissions grant read-write access only to LMF staff and read-only access to the component developers. Once the required ECRs have been created, the CI build process pushes the artefacts to them, so that the CD pipeline can be triggered and can pull the new artefacts.
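The read-write versus read-only split described above could be expressed as an ECR repository policy. The Python sketch below builds such a policy document; the principal ARNs passed to it are hypothetical, and LMF's actual permission setup may differ. The action names are standard ECR actions: pulling an image needs the three layer and image read actions, while pushing additionally needs the layer upload actions and `ecr:PutImage`.

```python
import json

# ECR actions needed to pull images (read-only access).
PULL_ACTIONS = [
    "ecr:BatchCheckLayerAvailability",
    "ecr:BatchGetImage",
    "ecr:GetDownloadUrlForLayer",
]

# Additional ECR actions needed to push images (read-write access).
PUSH_ACTIONS = [
    "ecr:InitiateLayerUpload",
    "ecr:UploadLayerPart",
    "ecr:CompleteLayerUpload",
    "ecr:PutImage",
]


def repository_policy(lmf_principals: list[str], developer_principals: list[str]) -> str:
    """Build an ECR repository policy: LMF read-write, developers read-only."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "LmfReadWrite",
                "Effect": "Allow",
                "Principal": {"AWS": lmf_principals},
                "Action": PULL_ACTIONS + PUSH_ACTIONS,
            },
            {
                "Sid": "DeveloperReadOnly",
                "Effect": "Allow",
                "Principal": {"AWS": developer_principals},
                "Action": PULL_ACTIONS,
            },
        ],
    }
    return json.dumps(policy, indent=2)
```

The resulting JSON could be attached to a repository with boto3's `ecr.set_repository_policy(repositoryName=..., policyText=...)`. Note that clients also need `ecr:GetAuthorizationToken` to log in to the registry, which is granted through an IAM identity policy rather than through the repository policy.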

Although maturity levels have also been defined for the CD pipeline, the process for taking components from level 3 to level 4 and beyond has not yet been defined. It will be defined step by step, through continuous feedback with the different developers, to achieve the best trade-offs in terms of security and reliability of the CD environment.

CI and CD infrastructure

The core capabilities the environment provides are:

1. Perform continuous integration tasks when triggered by changes in watched repositories of SOFIE WP2 and WP5 material. Supports multiple repositories and build pipelines.

2. Perform continuous delivery tasks, including automated integration tests, and deployment to staging and production environments. Supports multiple pipelines, e.g. for each pilot separately.

3. Support per-pilot integration needs by providing servers on which pilots can configure any gateways or other services needed during integration testing, staging and production deployments.

4. Provide enough flexibility in the CI/CD pipelines and the environment to make it feasible to automate various evaluation tasks and to support manual validation and evaluation tasks.
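Capabilities 1 and 2 imply that each push notification from a watched repository must be routed to the right pipeline. The Python sketch below shows one possible way to do this, assuming GitHub-style webhooks: it verifies the `X-Hub-Signature-256` HMAC header and maps the pushed repository and branch to a pipeline name. The repository, branch and pipeline names are hypothetical placeholders; in practice the Jenkins-based CI environment takes care of this routing.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical mapping of watched (repository, branch) pairs to CI pipelines,
# e.g. one pipeline per component or per pilot.
WATCHED = {
    ("example-org/component-a", "master"): "component-a-ci",
    ("example-org/pilot-x-backend", "master"): "pilot-x-ci",
}


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check a GitHub-style X-Hub-Signature-256 header ("sha256=<hex digest>")."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), signature_header.encode())


def pipeline_for_push(event: dict, watched: dict = WATCHED) -> Optional[str]:
    """Return the pipeline to trigger for a push event, or None to ignore it.

    Expects the push payload fields "ref" (e.g. "refs/heads/master") and
    "repository" -> "full_name" (e.g. "example-org/component-a").
    """
    prefix = "refs/heads/"
    ref = event.get("ref", "")
    if not ref.startswith(prefix):
        return None  # tag pushes and other refs do not trigger builds here
    branch = ref[len(prefix):]
    repository = event.get("repository", {}).get("full_name")
    return watched.get((repository, branch))
```

Keeping the mapping explicit means that only the branch named in a component's integration documentation triggers a build, while pushes to other branches are ignored.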

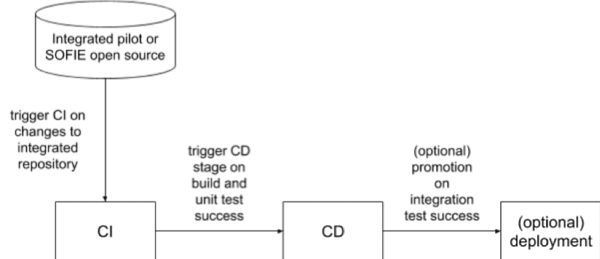

The underlying infrastructure where the integration and evaluation environment is being deployed as part of WP3 is the Amazon Web Services (AWS) cloud environment. An EU region is used for the deployment. Figure 2 shows the interactions between LMF, the pilot components, and the CI/CD pipeline.Figure 2: Overview of how WP2 and WP5 components are integrated and deployed on the CI/CD pipeline.

CI architecture

This environment is neither redundant nor designed for high availability, although it has been highly reliable so far. The underlying assumption is that, while the CI and CD processes are important, a failure caused by the loss of a virtual machine, database, etc. is unlikely and, in the worst case, manual recovery by rebuilding the failed components will take at most a week.

The environment is set up following an “infrastructure as code” approach, which allows easy and repeatable deployments of the complex multi-node integration environment. Terraform is well suited for this purpose, as it supports incremental deployments and parameterization of deployments, including the integration of externally managed resources into the deployment templates. In this model, the integration environment itself is described in the Terraform template language and stored in a version-controlled source code repository. Because the templates contain environment-specific and potentially sensitive information, they are not made public by default.
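The parameterized, incremental workflow described above can be sketched as follows. This Python helper is hypothetical: it renders deployment parameters as a JSON variable file (Terraform reads variable files ending in `.json` as JSON) and lists the standard `init`, `plan` and `apply` commands. The variable names and values are placeholders, not the actual SOFIE template variables.

```python
import json


def render_tfvars(variables: dict) -> str:
    """Render deployment parameters as a Terraform variable file in JSON syntax.

    Save the result under a name ending in .tfvars.json so Terraform parses it as JSON.
    """
    return json.dumps(variables, indent=2, sort_keys=True)


def terraform_commands(var_file: str, plan_file: str = "tfplan") -> list[list[str]]:
    """Commands to run, in order, from the directory holding the templates.

    `plan` compares the templates with the recorded state and saves the changes
    needed to reach the described environment; `apply` then executes exactly
    that saved plan, so repeated runs only change what differs.
    """
    return [
        ["terraform", "init", "-input=false"],
        ["terraform", "plan", "-input=false", f"-var-file={var_file}", f"-out={plan_file}"],
        ["terraform", "apply", "-input=false", plan_file],
    ]
```

Each command could be run with `subprocess.run(cmd, cwd=template_dir, check=True)`. Since the variable file may hold sensitive values, it would be kept out of any public repository, in line with the templates themselves.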

CD architecture

The deployment environment is separated from other infrastructure services (different subnets, a different VPC, etc.). The deployment process is driven by the Jenkins CD slave fleet and uses the general Kubernetes deployment functions provided by AWS EKS. Within the CI/CD environment, access to the EKS subnets is restricted to the CD slaves. Deployed services run in Docker containers orchestrated by AWS EKS (Kubernetes). EKS consists of a separate master cluster (the EKS control plane) and a cluster of worker nodes. The master cluster orchestrates the cluster and provides the Kubernetes API endpoint, while the worker nodes run the containers. The EKS control plane is managed by AWS, which provides automatic healing and makes it a fully production-ready platform. The worker nodes form an EC2 Auto Scaling group, which enables automated scaling of the cluster. In addition to Docker containers, deployments may be supplemented by other temporary or persistent services (databases, caches, pilot backend gateways, etc.).
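To connect the CI output with the CD runtime, the following Python sketch builds a minimal Kubernetes `apps/v1` Deployment that runs a component image from ECR on the EKS worker nodes. IP addresses and ports reach the container as environment variables, in line with the requirement that images not hard-code them. The account ID, region, repository, tag and variable names are placeholders, and the actual SOFIE CD pipeline may generate its manifests differently.

```python
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """ECR image URIs follow <account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def deployment_manifest(name: str, image: str, env: dict, port: int, replicas: int = 1) -> dict:
    """Minimal apps/v1 Deployment; IP addresses and ports come in as environment variables."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                            # Environment variable values must be strings in Kubernetes.
                            "env": [
                                {"name": k, "value": str(v)} for k, v in sorted(env.items())
                            ],
                        }
                    ]
                },
            },
        },
    }
```

Serialised with `json.dumps`, such a manifest could be applied from a CD slave with `kubectl apply -f manifest.json`, since `kubectl` accepts JSON as well as YAML manifests.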